Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. It is a powerful tool that lets us declare what the infrastructure should look like, and Kubernetes continuously works to keep the infrastructure in that desired state. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

How Kubernetes Works

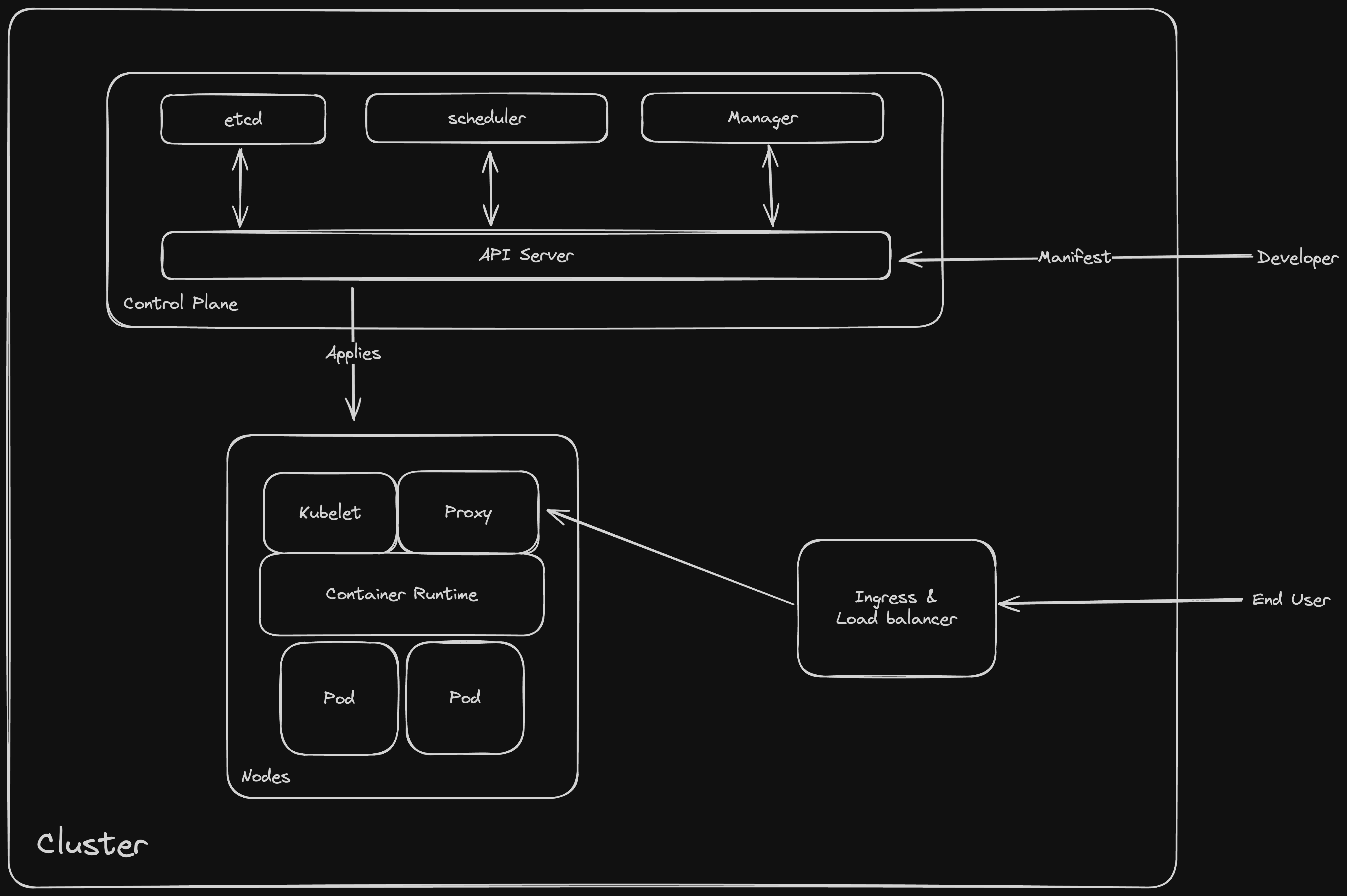

Kubernetes uses a declarative model, allowing users to specify their desired state for the deployments and services they run. The Kubernetes Control Plane takes these declarations and works to ensure that the current state matches the desired state. If a pod crashes, the controller notices the discrepancy and starts a new pod to maintain the desired state. This model enables scalability and reliability for containerized applications.

- Manifest: A YAML or JSON file that describes the desired state of the application, including the number of replicas, the container image to use, and the ports to expose.

- Cluster: A set of nodes (machines) that run containerized applications managed by Kubernetes.

- Control Plane: The collection of processes that manage the cluster and its nodes, including the API server, scheduler, controller manager, and etcd storage.

- etcd: A highly available key-value store used by Kubernetes to store all data needed to manage the cluster.

- Scheduler: A control plane component that watches for newly created pods with no assigned node, and selects a node for them to run on.

- Manager: Typically refers to the Kubernetes controller manager, a component of the control plane that runs controller processes.

- API Server: The front end of the control plane. It serves the Kubernetes API used to control the cluster and is the component that talks directly to the cluster's shared state in etcd.

- Node: A worker machine in the cluster that runs pods and is managed by the control plane.

- Kubelet: An agent that runs on each node in the cluster, ensuring that containers are running in a Pod.

- Proxy: Refers to kube-proxy, a network proxy that runs on each node in the cluster and maintains network rules that allow network communication to your pods from network sessions inside or outside the cluster.

- Container Runtime: The software responsible for running containers, such as containerd or CRI-O. Docker Engine was supported directly through dockershim until Kubernetes 1.24 removed it.

- Pod: The smallest, most basic deployable object in Kubernetes, which represents a single instance of a running process in your cluster.
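To make the Pod entry concrete, here is a minimal sketch of a standalone Pod manifest. The name `hello-world-pod` is hypothetical, and the image is the same sample image used in the Deployment below. In practice, pods are usually created indirectly by a Deployment rather than written by hand like this.

```yaml
# Hypothetical standalone Pod, for illustration only.
apiVersion: v1
kind: Pod
metadata:
  name: hello-world-pod        # hypothetical name
spec:
  containers:
  - name: hello-world
    image: "gcr.io/google-samples/hello-app:1.0"
    ports:
    - containerPort: 8080      # port the container listens on
```

If this pod crashes or its node fails, nothing recreates it. That is why the controller-driven objects described below are preferred.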

Sample Manifest

Here’s a basic example of a Kubernetes manifest file for a deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
      - name: hello-world
        image: "gcr.io/google-samples/hello-app:1.0"
        ports:
        - containerPort: 8080
```

This manifest describes a Deployment named “hello-world” that keeps three replicas of a pod running the “hello-app” image at all times. Each pod exposes container port 8080.

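- Deployment: A deployment manages ReplicaSets, and through them pods, and provides declarative updates such as rolling updates. It does not create a Service; Services are defined as separate objects.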

- ReplicaSet: A replica set ensures that a specified number of pod replicas are running at any given time.

- Service: A service is an abstraction that defines a logical set of pods and a policy by which to access them.

- Pod: A pod is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers.
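The sample Deployment does not include a Service. A minimal sketch of one that selects the hello-world pods by their `app: hello-world` label might look like the following. The Service name `hello-world` is assumed here because it matches the name the Ingress example later in this article refers to.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-world          # assumed name, matching the Ingress backend below
spec:
  # type defaults to ClusterIP: reachable only from inside the cluster
  selector:
    app: hello-world         # matches the pod template labels in the Deployment
  ports:
  - protocol: TCP
    port: 8080               # port the Service exposes
    targetPort: 8080         # containerPort on the selected pods
```

Because the Service selects pods by label rather than by name, it keeps routing correctly as the ReplicaSet replaces pods.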

Other Main Components

Kubernetes clusters are made up of several key components that work together to provide a robust framework for deploying and managing applications. Here are the main parts:

Cluster

A Kubernetes cluster consists of a control plane (historically called the master node) and one or more worker nodes. The control plane is responsible for managing the state of the cluster, while the worker nodes run the actual applications and workloads.

Namespace

Namespaces are a way to divide cluster resources. They provide a scope for names, and they are intended for use in environments with many users spread across multiple teams or projects.
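A namespace is itself just another declared object. The sketch below creates a namespace called `team-a`, which is a hypothetical name. A resource is placed into a namespace by setting `metadata.namespace`, as shown in the second, abbreviated document.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a               # hypothetical team namespace
---
# Abbreviated: only the metadata of a namespaced object is shown.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
  namespace: team-a          # places this Deployment in team-a
```

The second document is a fragment and is not valid on its own. To apply it, copy the rest of the Deployment spec from the sample manifest above.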

Context

In Kubernetes, a context is an entry in the kubeconfig file that groups a cluster, a user (its credentials), and an optional default namespace under one name. Switching contexts lets tools such as kubectl move quickly between clusters and namespaces.
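The following sketch shows how a kubeconfig file ties these pieces together. Every name, the server URL, the file path, and the token placeholder are hypothetical. With this file in place, `kubectl config use-context dev` would select the `dev` context.

```yaml
apiVersion: v1
kind: Config
clusters:
- name: dev-cluster                            # hypothetical cluster entry
  cluster:
    server: https://dev.example.com:6443       # hypothetical API server URL
    certificate-authority: /path/to/ca.crt     # hypothetical path
users:
- name: dev-user                               # hypothetical credentials entry
  user:
    token: REPLACE_WITH_TOKEN                  # placeholder, not a real token
contexts:
- name: dev                                    # the context: cluster + user + namespace
  context:
    cluster: dev-cluster
    user: dev-user
    namespace: team-a                          # default namespace for this context
current-context: dev
```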

Network

Kubernetes networking addresses four main concerns: container-to-container communication, pod-to-pod communication, pod-to-service communication, and external-to-service communication. Kubernetes is designed around a flat network model, so any pod can communicate with any other pod, on any node, without NAT.

Ingress in Kubernetes

Ingress is a Kubernetes API object that manages external access to the services in a cluster, typically over HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting, which makes it a powerful tool for routing external traffic into Kubernetes services.

How Ingress Works

An Ingress is configured to give services externally-reachable URLs, load balance traffic, terminate SSL/TLS, and offer name-based virtual hosting. Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

An Ingress controller is responsible for implementing the Ingress, usually with a load balancer, though it can also configure an edge router or additional frontends to help handle the traffic. An Ingress resource has no effect unless an Ingress controller is running in the cluster. Several Ingress controllers are available, such as NGINX, Traefik, and Istio, so users can choose the best fit for their needs.
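When more than one controller runs in a cluster, an IngressClass states which controller should handle a given Ingress. The sketch below assumes the ingress-nginx controller is installed. Its installation often creates an equivalent IngressClass automatically, so this is illustrative rather than a required step.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  # Identifies the controller that implements Ingresses of this class;
  # k8s.io/ingress-nginx is the value used by the ingress-nginx project.
  controller: k8s.io/ingress-nginx
```

An Ingress opts into this class by setting `spec.ingressClassName: nginx`.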

Sample Ingress Manifest

Here is a basic example of an Ingress manifest that exposes the hello-world service at http://hello-world.example.com:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: hello-world.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-world
            port:
              number: 8080
```

This Ingress routes all HTTP traffic for hello-world.example.com to port 8080 of the hello-world service, which in turn forwards it to the service's pods.
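Ingress can also terminate SSL/TLS. The following is a sketch of the same Ingress with a `tls` section added. It assumes a Secret of type `kubernetes.io/tls` named `hello-world-tls` (a hypothetical name) already exists in the same namespace and holds the certificate and key for the host.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
spec:
  tls:
  - hosts:
    - hello-world.example.com
    secretName: hello-world-tls     # hypothetical Secret with tls.crt and tls.key
  rules:
  - host: hello-world.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-world
            port:
              number: 8080
```

The controller then serves https://hello-world.example.com with that certificate and forwards decrypted traffic to the service as before.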

Conclusion

Kubernetes is a powerful tool for managing containerized applications, providing efficiency, scalability, and reliability. Whether we deploy applications on-premises, in the cloud, or in a hybrid environment, Kubernetes is a flexible platform for launching and managing container workloads.

The ecosystem is also vast, with many features available. The learning curve can be steep, since we need to understand many concepts such as deployment patterns and networking. However, the features Kubernetes offers are very useful in large-scale applications, where they help avoid many human errors.

In my opinion, declarative models usually force us to think through all the required aspects of a system before we start. The mental block lasts only until you become familiar with the concepts. Once you understand them, you will enjoy the powerful features and the expressive power of declarative models.